This research presents an AI-driven smart microscope system for high-precision characterization of 2D materials, particularly transition-metal dichalcogenides (TMDs). The system uses a generative deep learning-based image-to-image translation method to enable high-throughput, automated TMD characterization. By integrating deep learning algorithms, it can analyze and interpret data from multiple imaging and spectroscopic techniques, including optical microscopy, Raman spectroscopy, and photoluminescence spectroscopy, without extensive manual analysis. The invention bridges quantum technology and computer science by applying an AI-based approach to ultra-thin 2D materials that are only a few atoms thick. It paves the way for the next generation of ultra-thin electronic devices, advanced sensors, and energy-efficient gadgets.

Novel Features of the AI-Driven Smart Microscope

This invention introduces several advances in automating 2D material characterization, with direct relevance to materials science and nanotechnology.

✅ Advanced Handling of Complex Structures – Accurately analyzes heterostructures and multi-layered 2D materials.

✅ Seamless Experimental Data Integration – Combines optical microscopy, Raman, and photoluminescence spectroscopy for a comprehensive material analysis.

✅ Ultra-Fast Processing Speed – Processes 100 optical images in 30 seconds (roughly 0.3 seconds per image), fast enough for near-real-time feedback during imaging.

✅ “Super-Smart” Microscope Capabilities – Functions as an AI-powered microscope that enhances precision without manual intervention.

✅ Optimized for Small Data Sets – Unlike traditional deep convolutional networks, this model effectively generalizes even with limited training data.

✅ Layer Classification & Material Adaptability – Categorizes 2D material layers into five distinct classes, demonstrating adaptability across diverse material compositions.

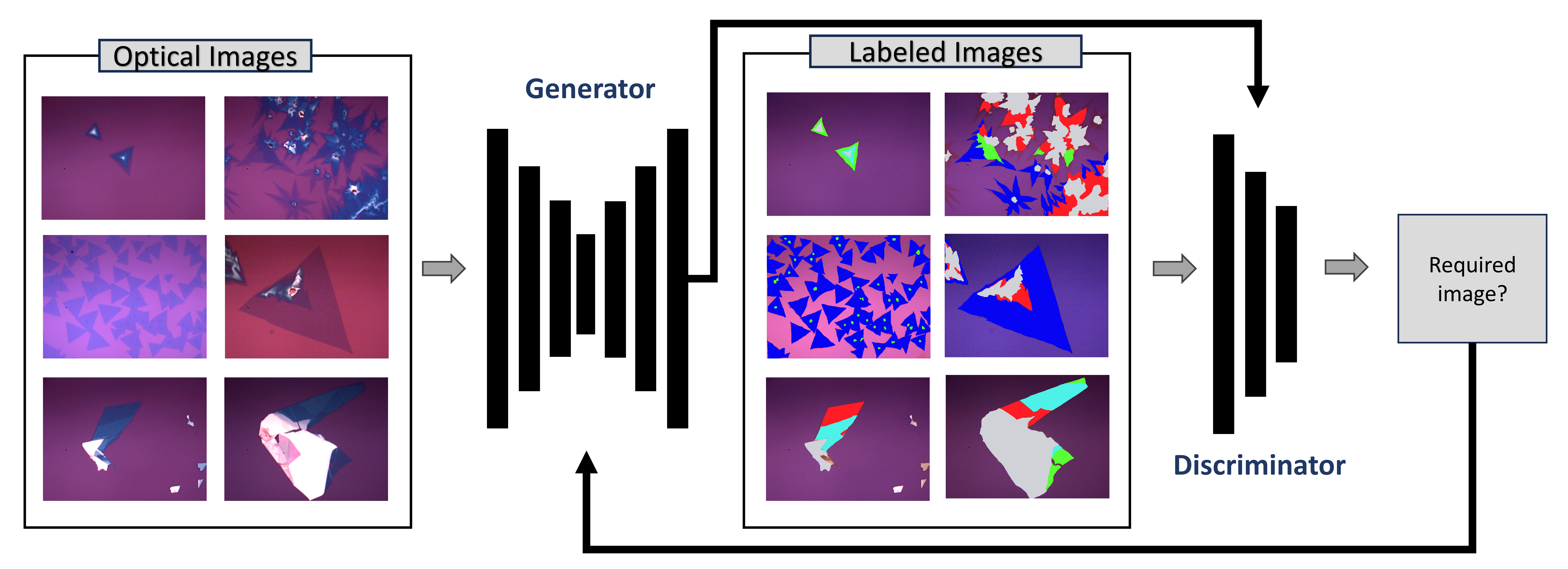

A DL-based pix2pix cGAN network has been demonstrated to identify and characterize TMDs with different layer numbers, sizes, and shapes. The DL-based pix2pix cGAN network was trained using a small set of labeled optical images, translating optical images of TMDs into labeled images that map each layer with a specific color and give a visual…

https://link.springer.com/article/10.1557/s43577-024-00741-6
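
The excerpt above describes labeled output images in which each layer is drawn in its own color. As a rough illustration of how such an output could be turned into numbers, the sketch below snaps every pixel to the nearest palette color and reports the area fraction per class. The class names, the RGB palette, and the helper functions `nearest_class` and `area_fractions` are all hypothetical placeholders: the source states only that there are five classes and does not list them or their colors. This is not the authors' code.

```python
from collections import Counter

# Hypothetical palette: the source says five classes, each with its own color,
# but does not name the classes or the colors. These values are placeholders.
PALETTE = {
    "background": (0, 0, 0),
    "monolayer": (255, 0, 0),
    "bilayer": (0, 255, 0),
    "trilayer": (0, 0, 255),
    "bulk": (255, 255, 0),
}


def nearest_class(pixel):
    """Return the palette class whose color is closest to an RGB pixel."""
    def sq_dist(name):
        return sum((a - b) ** 2 for a, b in zip(pixel, PALETTE[name]))
    return min(PALETTE, key=sq_dist)


def area_fractions(label_image):
    """Fraction of pixels assigned to each class in a color-coded label image.

    label_image is a list of rows; each row is a list of (R, G, B) tuples.
    """
    counts = Counter(nearest_class(px) for row in label_image for px in row)
    total = sum(counts.values())
    # Counter returns 0 for classes that never appear.
    return {name: counts[name] / total for name in PALETTE}


# Toy 2x4 "generated" image with slightly noisy colors, since a generative
# model rarely emits exact palette values.
toy = [
    [(3, 1, 0), (250, 4, 2), (250, 4, 2), (1, 251, 3)],
    [(0, 0, 2), (0, 0, 2), (254, 1, 1), (2, 3, 249)],
]
fractions = area_fractions(toy)
print(fractions)
```

The nearest-color step is there because generated images tend to contain small color deviations, so exact matching would miss pixels. The example is kept dependency-free for readability; for full-size micrographs a vectorized array library would be the more practical choice.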